Serverless Fan-Out in Vaccine Research

Discovering new vaccines involves intensive data science: the 3D structure and shape of a virus need to be matched against the 3D structures of hundreds of thousands of proteins that could potentially bind to the virus surface. We will not go into the many methodological challenges this problem poses; instead, we focus on how to deal with the hundreds of thousands of proteins that enter the arena.

As the saying goes, the cloud is just someone else’s computer, but it does offer serverless computing: an execution model that is well suited to extreme peaks of computation, e.g. massive 3D structure comparison or data ingestion for hundreds of thousands of proteins. Working in a serverless mode poses its own architectural problems, and in this post we give some insight into our thought process for the data ingestion.

Ingestion Function

Concretely, we were tasked with ingesting millions of messages from an external API into an Azure Storage Queue. Our first approach was a single serverless function executed through an HTTP endpoint, which we call the Ingestion Function. The ingestion function would in turn call the external API to fetch the messages and try to ingest them into the queue.
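A minimal sketch of that initial, single-invocation ingestion logic, with the Azure specifics abstracted away. `fetch_page` stands in for the external API (assumed here to be paginated and to return an empty list once exhausted), and `queue` is any object with a `send_message(content)` method, which is the shape of `QueueClient.send_message` in the `azure-storage-queue` SDK. All names are hypothetical; the point is that one invocation walks through every message by itself.

```python
from typing import Callable, List, Protocol


class MessageQueue(Protocol):
    """Anything with send_message, e.g. azure.storage.queue.QueueClient."""

    def send_message(self, content: str) -> object: ...


def ingest_all(fetch_page: Callable[[int], List[str]], queue: MessageQueue) -> int:
    """Pull every page from the external API and enqueue each message.

    A single invocation does all of the work sequentially, which is what
    turns this design into a bottleneck for millions of messages.
    """
    sent = 0
    page = 0
    while True:
        messages = fetch_page(page)  # hypothetical paginated external API
        if not messages:
            break
        for message in messages:
            queue.send_message(message)
            sent += 1
        page += 1
    return sent


class InMemoryQueue:
    """Test double standing in for the Azure Storage Queue."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def send_message(self, content: str) -> None:
        self.items.append(content)


if __name__ == "__main__":
    data = [f"protein-{i}" for i in range(25)]
    pages = [data[i:i + 10] for i in range(0, len(data), 10)]
    q = InMemoryQueue()
    count = ingest_all(lambda p: pages[p] if p < len(pages) else [], q)
    print(count)  # 25
```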

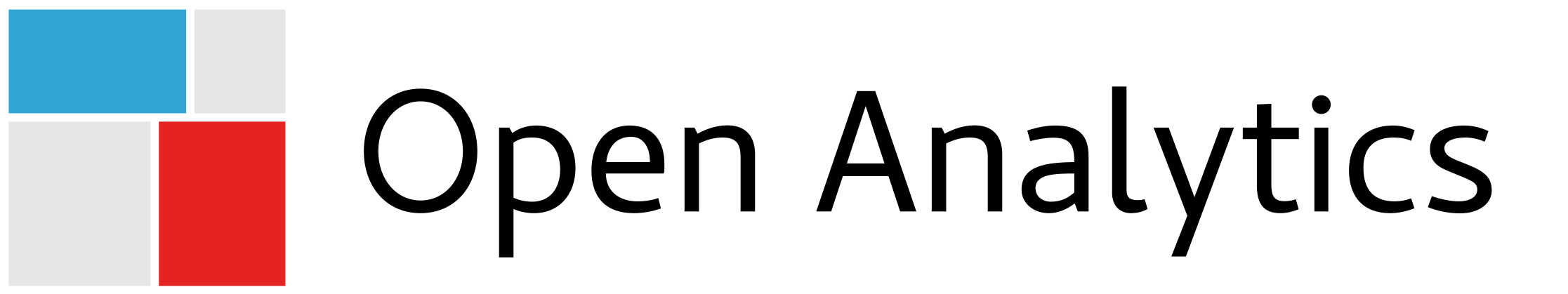

The initial architecture looks as follows:

As one can see, the single ingestion function is a bottleneck, because one invocation can only scale up to certain limits. It also does not exploit the strength of the serverless paradigm, which is many small invocations running in parallel. We therefore decided to split the ingestion work across multiple invocations of the function, so that it can scale out as far as needed.
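To make the bottleneck concrete, here is a rough back-of-the-envelope model. The 10 ms per-message latency and the one-million message count are assumed figures for illustration, not measurements from this project, and the model ignores throttling, cold starts and platform concurrency limits. For reference, the Azure Functions documentation gives the Consumption plan a default timeout of 5 minutes (configurable up to 10), and caps the response time of an HTTP-triggered function at about 230 seconds regardless of that setting.

```python
def sequential_seconds(n_messages: int, per_message_ms: float) -> float:
    """Wall-clock time if one invocation enqueues every message in turn."""
    return n_messages * per_message_ms / 1000


def chunk_count(n_messages: int, chunk_size: int) -> int:
    """Number of chunks (and therefore invocations) after splitting."""
    return (n_messages + chunk_size - 1) // chunk_size


def fan_out_seconds(n_messages: int, per_message_ms: float, chunk_size: int) -> float:
    """Idealized wall-clock time if every chunk runs in its own parallel invocation.

    The largest chunk sets the pace; platform concurrency limits are ignored.
    """
    return sequential_seconds(min(chunk_size, n_messages), per_message_ms)


if __name__ == "__main__":
    n = 1_000_000     # illustrative message count
    latency_ms = 10   # assumed per-message enqueue latency, not a measurement
    print(sequential_seconds(n, latency_ms))     # 10000.0 s, about 2.8 hours
    print(chunk_count(n, 5000))                  # 200
    print(fan_out_seconds(n, latency_ms, 5000))  # 50.0 s
```

Under these assumptions a single invocation would need hours, far beyond any function timeout, while each of the 200 chunk invocations finishes in under a minute.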

Chunking Function

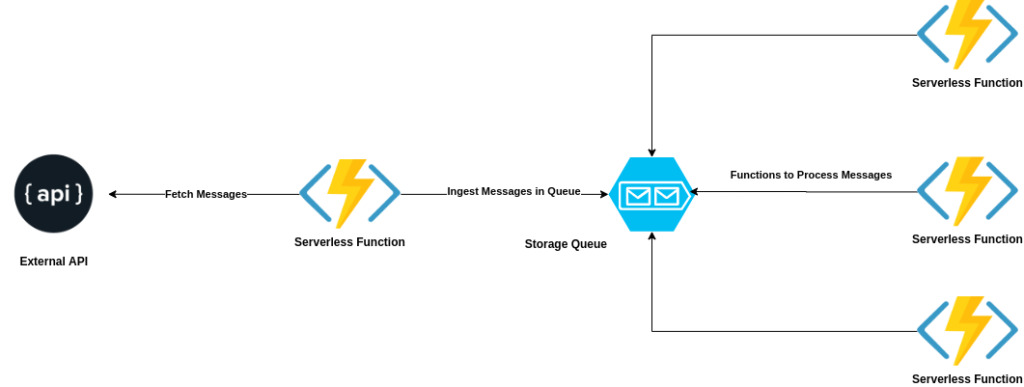

The idea was to divide the full set of messages into chunks (e.g. of 5,000 messages each) and pass each chunk to its own invocation of the ingestion function, which then ingests those messages into the queue.

In order to do so, we created another serverless function, which we call the Chunking Function, to divide the messages into chunks using this helper function:

```python
def chunking_of_list(data, chunk_size=5000):
    """Return the list split into consecutive chunks of at most chunk_size items."""
    return [data[x:x + chunk_size] for x in range(0, len(data), chunk_size)]
```
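The list comprehension in the helper materializes every chunk at once, which is fine when the IDs are already in memory. If the IDs instead arrive as a stream (for example page by page from the external API), a generator built on `itertools.islice` yields one chunk at a time. This is a swapped-in variant for illustration, not the code the pipeline used, and `iter_chunks` is a hypothetical name. On Python 3.12+, `itertools.batched` behaves similarly but yields tuples instead of lists.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def iter_chunks(items: Iterable[T], chunk_size: int = 5000) -> Iterator[List[T]]:
    """Yield consecutive lists of at most chunk_size items from any iterable."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    iterator = iter(items)
    while True:
        # islice consumes up to chunk_size items from the shared iterator.
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk


if __name__ == "__main__":
    sizes = [len(c) for c in iter_chunks(range(12_000))]
    print(sizes)  # [5000, 5000, 2000]
```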

We then uploaded each chunk as a separate file to Azure Blob Storage using this code:

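```python
import logging

# `storage` and `constant` are the project's own modules (not shown in this post).
import constant
import storage


def upload_chunks(id_lists):
    """Upload each chunk to Blob Storage as its own file: file0, file1, ..."""
    st = storage.Storage(container_name=constant.CHUNK_CONTAINER)
    for index, single_list in enumerate(id_lists):
        logging.info('Uploading file%d to the %s blob storage', index, constant.CHUNK_CONTAINER)
        st.upload_chunk(f'file{index}', single_list)
```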
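The `storage.Storage` wrapper is not shown, so how it serializes each chunk is unknown. A minimal sketch, assuming chunks are stored as JSON and that the container object exposes `upload_blob(name, data, overwrite=True)`, as `ContainerClient` in the `azure-storage-blob` SDK does. The in-memory container is a test double; in a deployment one might pass a client obtained via `BlobServiceClient.from_connection_string(...).get_container_client(...)`. The function name and the `file{index}.json` naming scheme are hypothetical.

```python
import json
from typing import Dict, List, Protocol, Sequence


class BlobContainer(Protocol):
    """Matches the shape of azure.storage.blob.ContainerClient.upload_blob."""

    def upload_blob(self, name: str, data: bytes, overwrite: bool = False) -> object: ...


def upload_chunks_as_json(container: BlobContainer, id_lists: Sequence[List[str]]) -> List[str]:
    """Upload each chunk as its own JSON blob and return the blob names."""
    names = []
    for index, chunk in enumerate(id_lists):
        name = f"file{index}.json"  # hypothetical naming scheme
        # overwrite=True lets a re-run of the chunking step replace old blobs.
        container.upload_blob(name, json.dumps(chunk).encode("utf-8"), overwrite=True)
        names.append(name)
    return names


class InMemoryContainer:
    """Test double standing in for an Azure Blob Storage container."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def upload_blob(self, name: str, data: bytes, overwrite: bool = False) -> None:
        if name in self.blobs and not overwrite:
            raise ValueError(f"blob {name} already exists")
        self.blobs[name] = data
```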

Final Architecture

Finally, we set up the ingestion function to listen for Azure Blob Storage upload events. As soon as a file is uploaded, the ingestion function downloads it, reads it, and ingests its messages into the queue. As desired, we now have many invocations of the ingestion function working in parallel, one per chunk, which gives us the scalability we were after.
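The core of the blob-triggered ingestion function can be sketched as a pure handler that turns the bytes of one uploaded chunk into queue messages. This assumes each chunk blob holds a JSON array of message strings (the post does not say how chunks are serialized) and that the queue exposes `send_message(content)`, like `QueueClient` in `azure-storage-queue`. In the Azure Functions Python v1 programming model, a blob trigger delivers an `azure.functions.InputStream` whose `read()` returns the blob bytes; that wiring appears only as a comment so the sketch runs without Azure. `handle_chunk` is a hypothetical name.

```python
import json
from typing import List, Protocol


class MessageQueue(Protocol):
    """Anything with send_message, e.g. azure.storage.queue.QueueClient."""

    def send_message(self, content: str) -> object: ...


def handle_chunk(blob_bytes: bytes, queue: MessageQueue) -> int:
    """Enqueue every message in one chunk and return how many were sent.

    Assumes the blob holds a JSON array of strings.
    """
    messages: List[str] = json.loads(blob_bytes.decode("utf-8"))
    for message in messages:
        queue.send_message(message)
    return len(messages)


# Possible wiring in the Azure Functions Python v1 model (not executed here):
#
#   import azure.functions as func
#
#   def main(myblob: func.InputStream) -> None:
#       handle_chunk(myblob.read(), queue_client)
#
# where queue_client would be a QueueClient created once per worker process.
```

Keeping the handler free of Azure types makes it easy to unit test and leaves the trigger binding as a thin adapter.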

Here is what the final architecture looked like:

In essence, we followed a fan-out architecture: instead of pushing the whole workload through a single serverless invocation, we fan it out across many.
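To illustrate the pattern without any cloud resources, the sketch below simulates fan-out locally: one step splits the workload into chunks, and a thread pool stands in for the platform launching one ingestion invocation per chunk. Threads are only a stand-in for separate serverless invocations, and the names `fan_out`, `ingest_chunk` and `ThreadSafeQueue` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from threading import Lock
from typing import List


class ThreadSafeQueue:
    """In-memory stand-in for the Azure Storage Queue."""

    def __init__(self) -> None:
        self._lock = Lock()
        self.items: List[str] = []

    def send_message(self, content: str) -> None:
        with self._lock:
            self.items.append(content)


def ingest_chunk(chunk: List[str], queue: ThreadSafeQueue) -> int:
    """One simulated 'invocation': enqueue every message in its chunk."""
    for message in chunk:
        queue.send_message(message)
    return len(chunk)


def fan_out(messages: List[str], queue: ThreadSafeQueue,
            chunk_size: int = 5000, workers: int = 8) -> int:
    """Split messages into chunks and process the chunks concurrently."""
    chunks = [messages[i:i + chunk_size] for i in range(0, len(messages), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = pool.map(lambda chunk: ingest_chunk(chunk, queue), chunks)
        return sum(counts)


if __name__ == "__main__":
    q = ThreadSafeQueue()
    total = fan_out([f"protein-{i}" for i in range(12_000)], q)
    print(total, len(q.items))  # 12000 12000
```

Messages from different chunks may land in the queue interleaved, so this pattern fits workloads where message order does not matter.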